Document analysis and Malware Sandboxes?

Post originally published on my old blog. It was automatically translated, so the translation may be poor in places.

I recently got my hands on a .doc file that had been sent to me and that an antivirus heuristic had flagged as infected.

When told about the possible infection, the person who gave me the file simply replied that other AVs had not identified it as malicious. He questioned the first analysis, implying that he did not believe the file was actually infected.

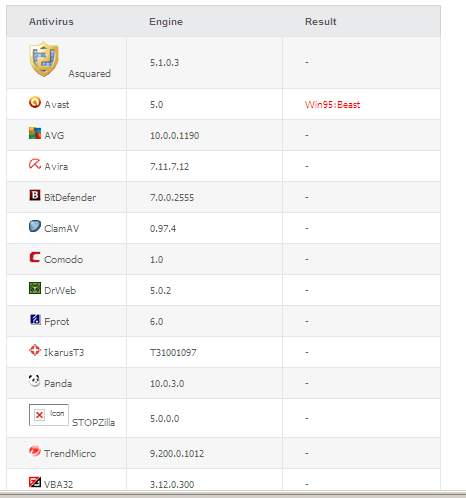

So I asked him what made him feel so sure that the document was not really infected. To my surprise, I received a screenshot of the results from an antivirus (AV) cloud service:

The fact that an AV solution, even if only one, had detected the file as malicious did not seem to bother him. The questions that then came to mind were:

Do other cloud and sandbox solutions have similar results?

Do they have the capacity to analyze documents of this type (Composite Document File V2, CDF)?

Do the tools warn you when they are unable to parse the document, or do they simply check its hash against a database of known samples?

To answer the first question, I went after other cloud and sandbox solutions to see how they behaved.

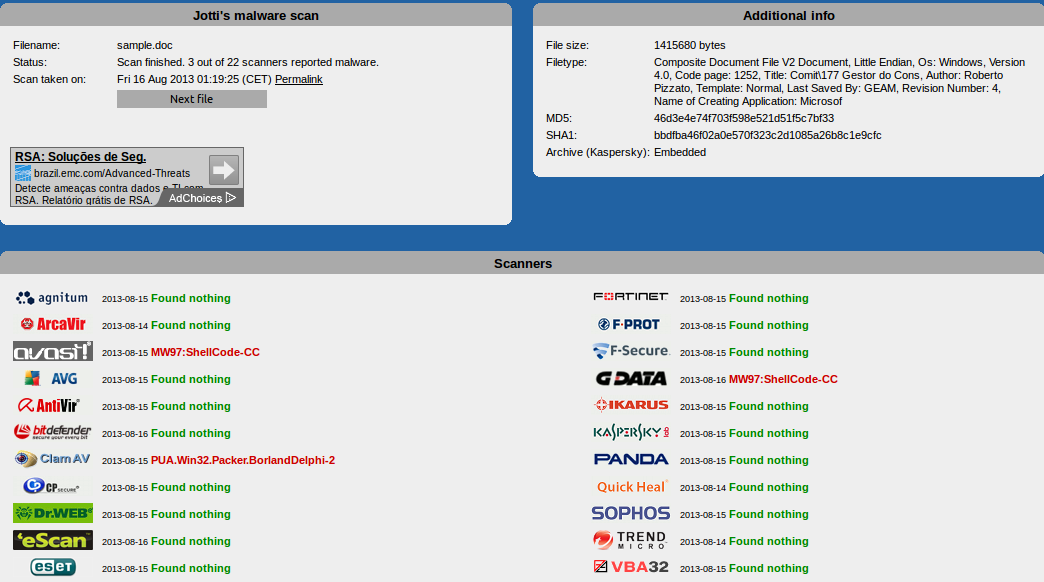

Note that more than one solution detected a threat, and that they agree on one point: the categorization of the file as MW97: ShellCode. We will use this information later.

Unfortunately we don't know the engine version of these solutions.

It is worth noting that the open-source solution reported two important pieces of information (assuming they are accurate):

PUA (Possibly Unwanted Application): This does not necessarily mean that the file is infected, only that it has characteristics that can be used for “good” or “evil”. In this case, the engine identified a possible packer.

BorlandDelphi-2: Does this indicate that the possibly malicious code was built using Borland Delphi? We will try to verify this later.

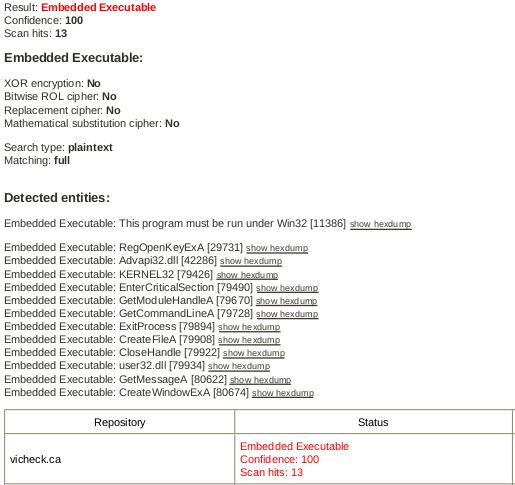

Now let's see another result:

This time we have a more “correct” result: 13 pieces of embedded code were found. Among them, it is worth highlighting the references to the following names: KERNEL32, GetCommandLineA, CreateFileA, CreateWindowExA. Note that this sandbox specializes in analyzing malicious documents:

“An advanced malware detection engine designed to decrypt and extract malicious executables from common document formats such as MS Office Word, Powerpoint, Excel, Access, or Adobe PDF documents. ViCheck will detect the majority of embedded executables in documents as well as common exploits which download malware from the internet. ”
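To give an idea of how embedded code can be spotted in a document, here is a minimal sketch that searches raw bytes for the API names listed above, both in plain text and hidden behind a single-byte XOR key. This is not how the sandbox above works internally (its method is not documented here); the indicator list, the function names and the single-byte XOR assumption are mine, chosen only for illustration.

```python
# Illustrative indicator list: names taken from the sandbox result above.
INDICATORS = [b"KERNEL32", b"GetCommandLineA", b"CreateFileA", b"CreateWindowExA"]


def xor_bytes(data: bytes, key: int) -> bytes:
    """XOR every byte of data with a single-byte key."""
    return bytes(b ^ key for b in data)


def scan_for_indicators(data: bytes, indicators=INDICATORS):
    """Return (key, indicator, offset) tuples for every indicator found in
    data under any single-byte XOR key. Key 0 means the string is in clear."""
    hits = []
    for key in range(256):
        for needle in indicators:
            # Encode the short needle instead of decoding the whole file.
            encoded = xor_bytes(needle, key)
            offset = data.find(encoded)
            while offset != -1:
                hits.append((key, needle.decode(), offset))
                offset = data.find(encoded, offset + 1)
    return hits
```

To try it on a real file, read it with `open(path, "rb").read()` and pass the bytes to `scan_for_indicators`. A hit is only a hint that something executable may be embedded; payloads hidden with multi-byte keys or compression will not be found by this sketch.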

And to finish, one more analysis:


Now things get interesting! Another sandbox specializing in documents also found embedded code and some of the same calls. In addition, executable files were downloaded.

I believe the first question has been answered: clouds and sandboxes do not always show similar results. I uploaded the document (file 1) to at least five more sandboxes. Unfortunately, the results were inconsistent, and it was not just a difference in naming between them: some actually said there was nothing in the file!

“Who's right?” is a difficult question for the “ordinary” user to answer. If we upload the file to a cloud full of AVs, we have no way of knowing which ones actually performed the most appropriate check, because the method is almost never disclosed. Many AVs only check the file against the signatures in their database. Others use heuristic analysis, while others analyze the structure of the document.
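To illustrate why a check based only on known hashes is fragile, here is a small sketch. It assumes the simplest possible "signature database", a set of SHA-256 digests; real products use richer signatures, and the names `sha256_hex`, `is_known_bad` and the placeholder bytes are mine.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a hex string."""
    return hashlib.sha256(data).hexdigest()


def is_known_bad(data: bytes, known_hashes: set) -> bool:
    """Hash-only check: flags the file only if its exact digest is known."""
    return sha256_hex(data) in known_hashes


# Placeholder bytes standing in for a malicious document (not a real sample).
original = b"placeholder bytes standing in for a malicious document"
known_bad = {sha256_hex(original)}

# The same content with a single byte appended gets a different digest.
variant = original + b"\x00"

print(is_known_bad(original, known_bad))
print(is_known_bad(variant, known_bad))
```

The first lookup matches and the second does not, even though the two inputs differ by one byte. A tool that relies only on this kind of lookup will report "clean" for any sample it has not seen before, which is one plausible reason for the disagreement between services.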

Now we come to the answer to the second question: only some clouds and sandboxes really have the capacity to analyze CDFs.
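Before submitting a file anywhere, it helps to confirm that it really is a CDF, so you know whether the chosen service needs to understand that format. A minimal sketch: compound files start with a fixed 8-byte signature, so checking the first bytes is enough for a quick identification. The function name is mine, and this only identifies the container; it says nothing about whether the content is malicious.

```python
# Fixed 8-byte signature at the start of a Compound File (CDF / OLE2) document.
CDF_MAGIC = bytes.fromhex("D0CF11E0A1B11AE1")


def looks_like_cdf(data: bytes) -> bool:
    """Return True if data starts with the compound file signature."""
    return data[:8] == CDF_MAGIC
```

For a file on disk, reading the first 8 bytes is enough: `looks_like_cdf(open(path, "rb").read(8))`.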

And now? How much can a person or company trust these analyses? Many companies that own these clouds accept no responsibility for the results (at least not in their “free” versions). Several are simply unclear about their limitations and do not warn the user, which answers our third question. Just check the different sandboxes returned by Google (and listed in the references).

So… what is the way out???

Well… nothing beats going “hands on”. In the next post we will dig into a static analysis of the document.

Until then,

Bibliographic references: Check the original post linked before